Publications

C4DT FOCUS 3: Hacking in times of crises

Omniledger

A fast and efficient blockchain created by the lab of Prof. Bryan Ford.

Observer 5: The Challenges of Cryptofinance

test.vim: running tests the easy way

As we all know, writing tests when developing software is very important¹. Indeed, most modern programming environments have frameworks to write and run tests, sometimes even in the standard tooling: pytest for Python, cargo test for Rust, go test for Go, etc. Let’s set aside the actual writing of the…

The SwissCovid App

In response to the COVID-19 disease that has stormed the world since early 2020, many countries launched initiatives seeking to help contact tracing by leveraging the mobile devices people carry with them. The Federal Office of Public Health (FOPH) commissioned the effort for Switzerland, which resulted in the official SwissCovid…

Cross-platform network programming in wasm/libc

I’m still working on my fledger project. Its goal is to create a node for a decentralized system that runs directly in the browser. For this I want the following: Works in the browser or with a CLI: have a common codebase but use different network implementations. Direct browser-to-browser communication:…
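The "common codebase, different network implementations" requirement can be sketched in Rust as a trait that the shared node logic depends on, with the concrete transport selected at compile time through `cfg(target_arch = "wasm32")`. This is a minimal sketch under stated assumptions: all names (`Network`, `Node`, `BrowserNet`, `NativeNet`) are hypothetical and not fledger's actual API, and both transports are stubs. The native one only records messages in memory instead of opening sockets.

```rust
// Hypothetical sketch: these names are illustrative, not fledger's API.

/// The single interface the shared node logic depends on.
pub trait Network {
    fn send(&mut self, peer: &str, msg: &str) -> Result<(), String>;
}

/// Shared node logic, written once and generic over the transport.
pub struct Node<N: Network> {
    net: N,
}

impl<N: Network> Node<N> {
    pub fn new(net: N) -> Self {
        Node { net }
    }

    pub fn greet(&mut self, peer: &str) -> Result<(), String> {
        self.net.send(peer, "hello")
    }
}

/// Browser build: would wrap WebRTC/WebSocket bindings (stubbed here).
#[cfg(target_arch = "wasm32")]
pub struct BrowserNet;

#[cfg(target_arch = "wasm32")]
impl Network for BrowserNet {
    fn send(&mut self, _peer: &str, _msg: &str) -> Result<(), String> {
        Err("browser transport not implemented in this sketch".into())
    }
}

/// CLI build: would wrap native sockets; here it only records messages.
#[cfg(not(target_arch = "wasm32"))]
#[derive(Default)]
pub struct NativeNet {
    pub sent: Vec<(String, String)>,
}

#[cfg(not(target_arch = "wasm32"))]
impl Network for NativeNet {
    fn send(&mut self, peer: &str, msg: &str) -> Result<(), String> {
        self.sent.push((peer.to_string(), msg.to_string()));
        Ok(())
    }
}

// Native demo; a browser build would construct a BrowserNet instead.
fn main() {
    let mut node = Node::new(NativeNet::default());
    node.greet("peer-1").unwrap();
    println!("{:?}", node.net.sent);
}
```

Because `Node` only knows about the `Network` trait, the same node code compiles unchanged for both targets; only the small transport module differs per build.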

Shoup on Proof of History

For a long time I tried to understand what Proof-of-History brings to the table. What is it useful for? What problem does it solve? At the beginning of May 2022, Victor Shoup, a renowned cryptographer currently working at DFinity, took a deep dive into Proof of History.…

Lightarti – a lightweight Tor library

Lightarti is a mobile library developed in Rust, in collaboration with the SPRING lab at EPFL, the Tor team, and the original Arti library team.

Disco

Disco is a framework to implement machine learning algorithms that run in a browser. This allows testing new privacy-preserving decentralized ML algorithms.

Magic-Wormhole: communicate a secret easily

The problem. Here is a common scenario we have all run into: you need to communicate some piece of secret information, say a password, to another person. Perhaps you are onboarding a new colleague or granting access to a partner. But you don’t want to compromise this secret by transmitting…

Tandem

The Tandem / Monero project is a collaboration with Kudelski. It secures private keys in a privacy-preserving way.

Observer 4: Enabling data-based medical research while preserving patients’ privacy

Observer 3: Zero-trust cloud week, data protection in the cloud

`derive_builder`: usage and limitations

Basics. The builder pattern is a well-known coding pattern. It helps with object construction by using a dedicated structure to build another one. It is usually used when many arguments are required to build an object. The code examples are written in Rust, but the concepts behind them can…
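As a minimal sketch of the pattern itself, here is a hand-written builder in Rust for a hypothetical `Server` type. `derive_builder` exists to generate this kind of boilerplate, but the code the crate actually generates (setter style, error type) differs from this illustration, so treat it as an example of the pattern rather than of the crate's output.

```rust
// Hypothetical target type with several construction arguments.
#[derive(Debug, PartialEq)]
pub struct Server {
    host: String,
    port: u16,
    workers: usize,
}

// The dedicated builder structure: every field is optional until build().
#[derive(Default)]
pub struct ServerBuilder {
    host: Option<String>,
    port: Option<u16>,
    workers: Option<usize>,
}

impl ServerBuilder {
    // Each setter consumes the builder and returns it, so calls chain.
    pub fn host(mut self, host: &str) -> Self {
        self.host = Some(host.to_string());
        self
    }

    pub fn port(mut self, port: u16) -> Self {
        self.port = Some(port);
        self
    }

    pub fn workers(mut self, n: usize) -> Self {
        self.workers = Some(n);
        self
    }

    /// Validates and builds: `host` is required, the others have defaults.
    pub fn build(self) -> Result<Server, String> {
        Ok(Server {
            host: self.host.ok_or("host is required")?,
            port: self.port.unwrap_or(8080),
            workers: self.workers.unwrap_or(4),
        })
    }
}

fn main() {
    let server = ServerBuilder::default()
        .host("localhost")
        .workers(8)
        .build()
        .unwrap();
    println!("{:?}", server);

    // Missing the required host: the error surfaces at build() time.
    assert!(ServerBuilder::default().port(80).build().is_err());
}
```

Optional fields fall back to defaults inside `build`, so callers only spell out what differs; the trade-off is that a missing required field is reported at run time by `build` rather than at compile time.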

The Future of Privacy and Data in Wartime (with Chelsea Manning and Carmela Troncoso)

Observer 2: Should We Trust Digital Immunity Passports?

How to read your bank account on a public blockchain?

One claim often made about public blockchains is that they can replace your bank account. The idea is that you don’t need a central system anymore, but can open any number of accounts, as needed. However, as there is no central place, it is sometimes difficult to know how…

Fun with microcontrollers

Today it’s something about actual hardware, not just software. For Christmas I took a long LED strip and hooked it up to an Arduino Uno to create some animations. But not having WiFi was a bit of a shame, because this meant you couldn’t control it from a smartphone. So I…

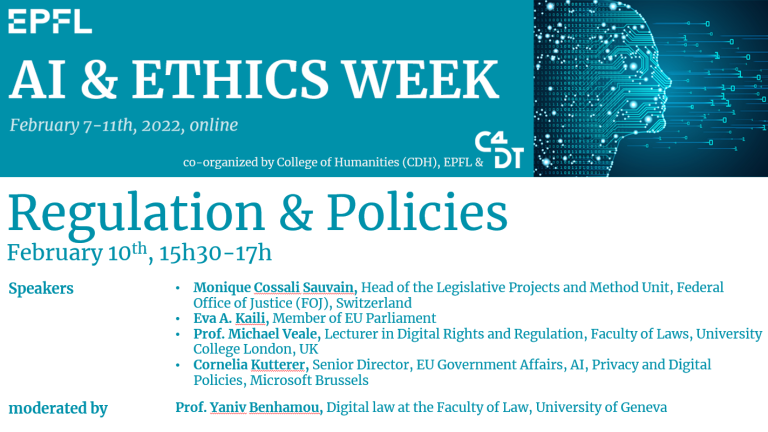

AI & Ethics Week, Session “Regulation & Policies”

AI & Ethics Week, Session “Research”

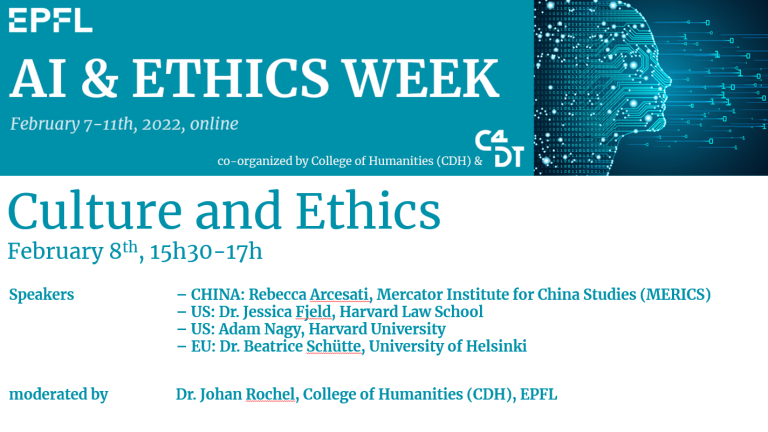

AI & Ethics Week, Session “Culture and Ethics”

AI & Ethics Week, Session “Culture & Ethics”

AI & Ethics Week, Session “Business Impact”